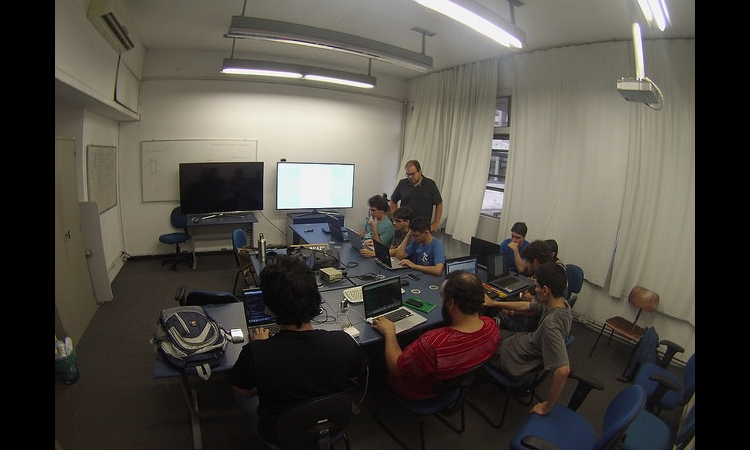

Trainer: Felice Pantaleo

Host Institute: UERJ, Rio de Janeiro

Duration: two weeks

Seminars

Introduction to Parallel Programming: motivations, architectures and algorithms.

The first part explained why computing systems are becoming increasingly parallel and heterogeneous.

The memory wall and the power wall were discussed, along with methods to mitigate their effects: reusing data, exploiting data locality, and improving memory access patterns.

Introduction to GPU Programming using CUDA

This series of lectures used examples written in CUDA to introduce the three abstractions that form the foundation of GPU programming:

- Thread hierarchy

- Synchronization

- Memory hierarchy/Shared Memory

The goal was to give the students a solid foundation on which to build their first CUDA application running on a GPU device.
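
As an illustration of how the three abstractions fit together, the following is a minimal sketch and not material taken from the lectures. It assumes a block size of 256 threads (a power of two) and uses unified memory for brevity; the names `blockSum`, `tile` and `kBlockSize` are hypothetical, and error checking is omitted.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

constexpr int kBlockSize = 256;  // threads per block; assumed to be a power of two

// Each block sums kBlockSize consecutive elements of `in` into out[blockIdx.x].
// The kernel must be launched with blockDim.x == kBlockSize.
__global__ void blockSum(const float* in, float* out, int n) {
    // Memory hierarchy: one shared-memory tile per block.
    __shared__ float tile[kBlockSize];

    // Thread hierarchy: position inside the block and inside the whole grid.
    int tid = threadIdx.x;
    int gid = blockIdx.x * blockDim.x + tid;

    tile[tid] = (gid < n) ? in[gid] : 0.0f;
    // Synchronization: wait until every thread of the block has written its element.
    __syncthreads();

    // Tree reduction in shared memory.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) tile[tid] += tile[tid + stride];
        __syncthreads();
    }
    if (tid == 0) out[blockIdx.x] = tile[0];
}

int main() {
    const int n = 1 << 20;
    const int blocks = (n + kBlockSize - 1) / kBlockSize;

    float* in = nullptr;
    float* out = nullptr;
    cudaMallocManaged(&in, n * sizeof(float));
    cudaMallocManaged(&out, blocks * sizeof(float));
    for (int i = 0; i < n; ++i) in[i] = 1.0f;

    blockSum<<<blocks, kBlockSize>>>(in, out, n);
    cudaDeviceSynchronize();  // wait for the kernel before reading `out` on the host

    double total = 0.0;
    for (int b = 0; b < blocks; ++b) total += out[b];
    printf("sum = %.0f (expected %d)\n", total, n);

    cudaFree(in);
    cudaFree(out);
    return 0;
}
```

The partial sums are combined on the host to keep the sketch short; a second kernel launch or an atomic update would keep the final step on the device.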

Load Balancing and Partitioning

This part aimed to help the students understand the relationship between a domain problem and the computational models available on a parallel architecture.

Techniques were shown for reducing the idle time of the Streaming Multiprocessors through dynamic scheduling and dynamic partitioning.
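
One common form of dynamic scheduling can be sketched as follows; this is an assumption about the kind of technique meant, not necessarily the one presented. Threads repeatedly claim the next work item from a global atomic counter, so threads that finish cheap items early pick up more work instead of idling. The names `nextItem` and `dynamicSchedule`, the artificial per-item cost and the launch configuration are all hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Head of a global work queue: index of the next unclaimed item.
__device__ unsigned int nextItem = 0;

// Item i has an uneven, artificial cost of (i % 64) loop iterations.
__global__ void dynamicSchedule(int* done, unsigned int numItems) {
    while (true) {
        // Claim one item; atomicAdd returns the value before the increment.
        unsigned int i = atomicAdd(&nextItem, 1u);
        if (i >= numItems) break;  // queue exhausted

        int acc = 0;
        for (int k = 0; k < static_cast<int>(i % 64); ++k) acc += k;
        done[i] = acc;
    }
}

int main() {
    const unsigned int numItems = 100000;
    int* done = nullptr;
    cudaMallocManaged(&done, numItems * sizeof(int));
    for (unsigned int i = 0; i < numItems; ++i) done[i] = -1;

    // Far fewer threads than items: each thread processes many items.
    dynamicSchedule<<<32, 128>>>(done, numItems);
    cudaDeviceSynchronize();

    // Verify that every item was processed exactly as expected.
    unsigned int correct = 0;
    for (unsigned int i = 0; i < numItems; ++i) {
        int m = static_cast<int>(i % 64);
        if (done[i] == m * (m - 1) / 2) ++correct;
    }
    printf("%u of %u items processed correctly\n", correct, numItems);

    cudaFree(done);
    return 0;
}
```

Claiming items one at a time per thread keeps the sketch simple; claiming a chunk of items per warp or per block reduces pressure on the counter at the cost of coarser balancing.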

Hands-on Session

The hands-on session focused on the following topics:

- GPU Memory management: allocation, data transfer between host and device, synchronization

- Kernel launch: offload of a parallel section to the GPU

- Partitioning of a problem to the GPU threads

- Profiling of a CUDA application

- Making use of the GPU shared memory

- Instruction-level parallelism using asynchronous operations and streams

- Reducing contention by privatization

- Scatter to gather

- Filling histograms on GPUs
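
Several of the topics above can be combined in one small program. The following is a minimal sketch under stated assumptions, not the exercise used in the session: it fills a 64-bin histogram from byte data, using explicit device allocation and host-device copies, a grid-stride loop to partition the input among threads, and a per-block private histogram in shared memory to reduce contention on the global bins. The names `histogram`, `local` and `kBins`, the input data and the launch configuration are hypothetical, and error checking is omitted.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

constexpr int kBins = 64;

__global__ void histogram(const unsigned char* data, int n, unsigned int* bins) {
    // Privatization: each block accumulates into its own shared-memory copy.
    __shared__ unsigned int local[kBins];
    for (int b = threadIdx.x; b < kBins; b += blockDim.x) local[b] = 0;
    __syncthreads();

    // Partitioning: grid-stride loop, so any grid size covers all n elements.
    int stride = blockDim.x * gridDim.x;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride)
        atomicAdd(&local[data[i] % kBins], 1u);
    __syncthreads();

    // Merge the private copy into the global histogram, one atomic per bin per block.
    for (int b = threadIdx.x; b < kBins; b += blockDim.x)
        atomicAdd(&bins[b], local[b]);
}

int main() {
    const int n = 1 << 20;
    std::vector<unsigned char> host(n);
    for (int i = 0; i < n; ++i) host[i] = static_cast<unsigned char>(i % 256);

    // Memory management: allocate on the device and copy the input over.
    unsigned char* dData = nullptr;
    unsigned int* dBins = nullptr;
    cudaMalloc(&dData, n * sizeof(unsigned char));
    cudaMalloc(&dBins, kBins * sizeof(unsigned int));
    cudaMemcpy(dData, host.data(), n * sizeof(unsigned char), cudaMemcpyHostToDevice);
    cudaMemset(dBins, 0, kBins * sizeof(unsigned int));

    // Kernel launch: offload the parallel section to the GPU.
    histogram<<<64, 256>>>(dData, n, dBins);

    // This blocking copy on the default stream waits for the kernel to finish.
    unsigned int bins[kBins];
    cudaMemcpy(bins, dBins, sizeof(bins), cudaMemcpyDeviceToHost);

    // With this input every bin should hold n / kBins entries.
    bool ok = true;
    for (int b = 0; b < kBins; ++b)
        if (bins[b] != static_cast<unsigned int>(n / kBins)) ok = false;
    printf("histogram %s\n", ok ? "ok" : "mismatch");

    cudaFree(dData);
    cudaFree(dBins);
    return 0;
}
```

Without the shared-memory copy, every thread would issue its atomic update directly to the global bins, which serializes heavily when many inputs fall into the same bin.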